|

A Cognition Briefing Contributed by: Xabier Barandiaran, University of the Basque Country

Overview

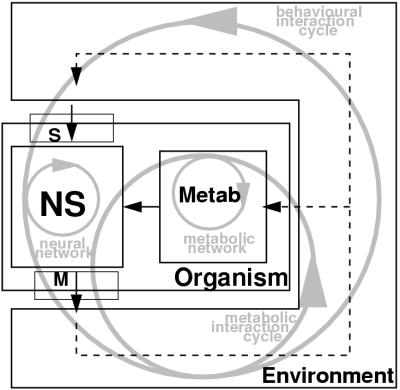

Fig. 1: Understanding cognitive systems as autonomous requires that we model their functioning as system-environment loops that contribute to self-maintenance. Metabolic self-production and behaviour appear intimately related.

Introduction

All organisms appear endowed with the capacity to continuously generate and regenerate their component processes, to set their own goals without external control, and to adaptively and selectively modify their internal and interactive processes accordingly. It is their autonomy that strikes us as a characteristic yet elusive property: that is, their capacity to create their own norms, constraints and regulation in a recurrent way, creatively playing with the laws of physics and chemistry against thermodynamic inertia, while continuously reproducing their own identity.

Since the early Aristotelian conceptualization of nature, organisms have been distinguished by the holistic dependence between matter, form and function in them; by their unity as tightly integrated systems, possibly with an intrinsic teleology, a continuous regeneration of their "raison d'être", of their causes. Kant was among the first to recognize the challenge that understanding such systems posed for the human mechanistic mindset (especially after its shaping by Cartesian rational materialism and Newtonian corpuscular physicalism). Yet Spinoza built a good part of his philosophy of nature on the notion of conatus: organisms' intrinsic drive towards self-assertion and self-maintenance. While early modern biologists (Schwann, Goethe and the early naturalists) focused on the cell as a unitary whole endowed with fascinating properties (far beyond what the mechanics of the time could achieve), the fast-growing science of chemistry triggered a long-standing debate between vitalists and reductionists, and the mainstream scientific community was soon won over by the achievements of reductionist-atomistic methodologies. It was not until the development of some early cybernetic ideas during the 1970s that the concept of autonomy (closely related to the notions of network, self-organization and closure) was reformulated and reintroduced into scientific discourse.

Today, the concept of autonomy (stripped of its vitalistic connotations) stands, for many, at the center of the sciences of complexity, systems biology, robotics and artificial life. But autonomy as a property or condition of the organism is not easy to specify and make explicit. The term autonomy, in relation to biology, adaptive behaviour and cognition, has been used in many different ways, ranging from a foundational notion for biological organization (Varela, 1979) to a label for a set of engineering constraints in robotics (Maes, 1991). There is still no clear quantitative definition of autonomy that can be directly applied to the dynamical modelling and simulation of adaptive behaviour. In addition, the relation between autonomy, adaptation, its biological grounding and its robotic application is often presented in conflicting and misleading ways in the literature.

Autonomy across the Literature. A Quick Overview

The practice of autonomous robotics has forced some engineers to go beyond the specification of a list of engineering constraints and to develop a more elaborate notion of autonomy, one that specifies the kind of interaction process that is established between the robot and its physical environment, the dynamic structure and properties of the control mechanisms, and the underlying consequences for cognitive science and epistemology. That is the case of engineers like Tim Smithers (1995, 1997), who has developed a theory of behavioural autonomy in robots that challenges information-processing approaches to cognition; Randall Beer (1995, 1997), who challenges representational approaches to cognition; and Eric Prem (1997, 2000), who has put the emphasis on epistemic autonomy, "the system's own ability to decide upon the validity of measurements" (Prem, 1997), a process that cannot be reduced to formal aspects, given the physicality of the measuring process and its pre-formal nature. These authors have been greatly influenced by the biologist Francisco Varela, whose definition of autonomy goes as follows: "Autonomous systems are mechanistic (dynamic) systems defined as a unity by their organization. We shall say that autonomous systems are organizationally closed. That is, their organization is characterized by processes such that (1) the processes are related as a network, so that they recursively depend on each other in the generation and realization of the processes themselves, and (2) they constitute the system as a unity recognizable in the space (domain) in which the processes exist." (Varela, 1979, p. 55).

Autonomy is thus defined as an abstract, systemic kind of organization (a relation between components and processes in a system): a self-maintained, self-reinforced and self-regulated system dynamics resulting from a highly recursive network of processes that generates and maintains internal invariants in the face of internal and external perturbations. Such a process separates itself from the environment and thereby defines its identity, that is, its unity as a system distinguishable from the surrounding processes. This abstract notion of autonomy is realized at different biological scales and domains, defining different levels. As a paradigmatic example, "life" is defined as a special kind of autonomy: autopoiesis, or autonomy in the physical space. In turn, "adaptive behaviour" is the result of a higher level of autonomy: that of the nervous system, producing invariant patterns of sensorimotor correlations and defining the behaving organism as a mobile unit in space.

But Varela's perspective on autonomy, although highly influential, has recently been criticised for its emphasis on closure and for the secondary role that system-environment interactions play in its definition and constitution of autonomous systems. Introducing ideas from complexity theory and thermodynamics, authors like Bickhard (2000), Christensen and Hooker (2000), Collier (1999, 2002), and Moreno and Ruiz-Mirazo (2000) have defended a more specific notion of autonomy as a recursively self-maintaining, far-from-equilibrium and thermodynamically open system. The interactive side of autonomy is essential in this definition: autonomous systems must interact continuously with their environment to assure the flow of matter and energy necessary for their self-maintenance. The philosophical consequences derived from the nature of autonomous systems are highlighted by these authors and summarized in Collier's words: "No meaning without intention; no intention without function; no function without autonomy." (Collier, 1999).

Autonomy is thus made the naturalized basis for functionality, intentionality, meaning and normativity. But it is not always clear what the relation between basic (lower-level, thermodynamic) autonomy and adaptive behaviour is, or how such thermodynamic processes interact with neural mechanisms. It is even argued that dynamical systems theory (and thus computational simulation models) cannot capture the kind of organization that autonomy is, or that robots should be self-constructive in order to be "truly" autonomous.

Key Relevant Issues for Cognitive Science

Current Research Areas Dealing with Cognitive Autonomy

References

Brooks, R. A. (1991). Intelligence without representation. Artificial Intelligence Journal, 47:139-160.

Christensen, W. and Hooker, C. (2002). Self-directed agents. Contemporary Naturalist Theories of Evolution and Intentionality, Canadian Journal of Philosophy, 31 (special issue).

Collier, J. (2002). What is autonomy? In International Journal of Computing Anticipatory Systems: CASY 2001 - Fifth International Conference.

Di Paolo, E. (2003). Organismically inspired robotics. In Murase, K. and Asakura, T., editors, Dynamical Systems Approach to Embodiment and Sociality, pages 19-42. Advanced Knowledge International, Adelaide, Australia.

Maes, P., editor (1991). Designing Autonomous Agents. MIT Press.

Maturana, H. and Varela, F. (1980). Autopoiesis. The realization of the living. In Maturana, H. and Varela, F., editors, Autopoiesis and Cognition. The realization of the living, pages 73-138. D. Reidel Publishing Company, Dordrecht, Holland.

Prem, E. (1997). Epistemic autonomy in models of living systems. In Proceedings of the Fourth European Conference on Artificial Life. MIT Press, Bradford Books.

Prem, E. (2000). Changes of representational AI concepts induced by embodied autonomy. Communication and Cognition - Artificial Intelligence, 17(3-4):189-208. Special Issue on: The contribution of artificial life and the sciences of complexity to the understanding of autonomous systems. Guest Editors: Arantza Etxeberria, Alvaro Moreno, Jon Umerez.

Ruiz-Mirazo, K. and Moreno, A. (2000). Searching for the Roots of Autonomy: the natural and artificial paradigms revisited. Artificial Intelligence, 17(3-4), special issue:209-228.

Smithers, T. (1995). Are autonomous systems information processing systems? In Steels, L. and Brooks, R., editors, The artificial life route to artificial intelligence: Building situated embodied agents. Erlbaum, New Haven.

Smithers, T. (1997). Autonomy in Robots and Other Agents. Brain and Cognition, (34):88-106.

Varela, F. (1979). Principles of Biological Autonomy. North-Holland, New York.

This briefing is based on an early draft of Barandiaran, X. (2004). Behavioral Adaptive Autonomy. A milestone in the Alife route to AI? Proceedings of the 9th International Conference on Artificial Life. MIT Press, Cambridge, MA, pp. 514-521.

|