An iRobot Create 2 mobile robot and a Lynxmotion AL5D five-degree-of-freedom robot manipulator used in this course.


Course Description

Robotics is the discipline concerned with physical, autonomous systems that can sense their environment and act on it to achieve goals, either by moving around their environment, by moving objects in it, or both. This course explains how they do this, covering the theoretical knowledge required to sense the state of the robot and its environment, and to control the robot so that it accomplishes its tasks. This theory is put into practice throughout the course using robot simulators and physical robots. Students will deepen their understanding of each topic through a series of individual and group assignments and exercises, applying the principles they have learned to write software that allows a robot to navigate to given targets and to manipulate objects based on visual sensing. The course is an ideal foundation for further study in robotics. Student progress is assessed by a series of multiple-choice tests and written individual and group assignments. There are no prerequisites for taking the course.

Learning Objectives

Students will be introduced to the different types of robot and the components, effectors, actuators, sensors, and control systems that are used for visual sensing, locomotion, and object manipulation. For mobile robots, students will learn the main principles of odometry, position estimation, kinematics, inverse kinematics, control, locomotion, path planning, and navigation. For robot manipulators, they will learn the theory required for pose specification, object manipulation, and task-level robot programming. For visual sensing, students will learn the fundamentals of image processing, image analysis, feature extraction, classification, camera calibration, and 2D & 3D computer vision. They will learn how to program robots using ROS (Robot Operating System), C/C++, and OpenCV, putting into practice in the lab what they have learned in the classroom.

Outcomes

After completing this course, students will be able to do the following.

Lecture Notes

Module 1: Introduction and Robot Components

Module 2: The Robot Operating System (ROS)

Module 3: Mobile Robots

Module 4: Robot Manipulators

Module 5: Robot Vision

Course Textbook

At present, there is no single textbook that covers all the material in this course. Instead, we will use selected chapters of the books in the recommended reading below.

Recommended Reading

Bartneck, C., Belpaeme, T., Eyssel, F., Kanda, T., Keijsers, M., and Sabanovic, S. (2020). Human-Robot Interaction: An Introduction, Cambridge University Press. Chapter 3: How a Robot Works.

Corke, P. (2023). Robotics, Vision and Control, 3rd Edition, Springer.

Mataric, M. (2007). The Robotics Primer, MIT Press.

Murphy, R. (2000). Introduction to AI Robotics, MIT Press.

O'Kane, J. M. (2018). A Gentle Introduction to ROS.

Paul, R. (1981). Robot Manipulators: Mathematics, Programming, and Control, MIT Press.

Siegwart, R., Nourbakhsh, I., and Scaramuzza, D. (2011). Introduction to Autonomous Mobile Robots, 2nd Edition, MIT Press.

Szeliski, R. (2010). Computer Vision: Algorithms and Applications, Springer.

Vernon, D. (1991). Machine Vision: Automated Visual Inspection and Robot Vision, Prentice-Hall.

Software Development Environment

Lecture 1 in Module 2 has detailed instructions for installing the software required for the various exercises in the course.

The simulator for the Lynxmotion AL5D robot manipulator for Module 4 and the ROS example programs for Modules 2, 3, 4, and 5 are available on the course GitHub repository github.com/cognitive-robotics-course.

Resources

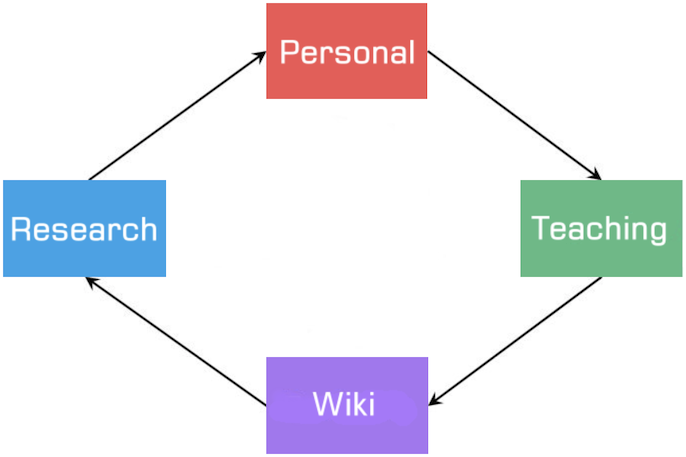

Additional material can be found on my wiki.

Acknowledgements

I wish to acknowledge with thanks the support I received from the IEEE Robotics and Automation Society under the program Creation of Educational Material in Robotics and Automation (CEMRA) 2020 for the development of a course on cognitive robotics, from which a significant amount of the material in this course was derived.

This course was developed over a five-year period leading up to and during the time I worked at Carnegie Mellon University Africa in Rwanda, starting in 2017. My thanks go to the students there, several of whom have contributed directly or indirectly to the material. Their deep interest and searching questions made all the difference.

Special thanks go to Vinny Adjibe, Abrham Gebreselasie, Innocent Mukoki, Ribeus Munezero, and Timothy Odonga for their work developing material and tools for the course during their Summer 2020 CMU-Africa internships.

My thanks go to Carnegie Mellon University Africa for its generous support in sponsoring the internships and teaching assistantships, and for supplementing the funds provided by the IEEE Robotics and Automation Society to support Derek Odonkor's visit to the University of Bremen.

The module on mobile robots benefitted greatly from a course developed by Alessandro Saffiotti, Örebro University, Sweden, on Artificial Intelligence Techniques for Mobile Robots. I borrowed heavily from this material, whilst creating my own illustrations and diagrams.

The module on robot vision is a very short version of my course on applied computer vision which, in turn, drew inspiration from several sources, including courses given by Kenneth Dawson-Howe at Trinity College Dublin, Kris Kitani at Carnegie Mellon University, Francesca Odone at the University of Genova, and Markus Vincze at Technische Universität Wien. Many of the OpenCV examples are taken from Kenneth Dawson-Howe's book, A Practical Introduction to Computer Vision with OpenCV, and its accompanying code samples.

All images and diagrams are either original or have their source credited. My apologies in advance for any unintended omissions. Technical drawings were produced in LaTeX using TikZ and the 3D Plot package.

David Vernon, Institute for Artificial Intelligence, University of Bremen, Germany, and Carnegie Mellon University Africa, Rwanda.

Module 1: Introduction and Robot Components

Lecture 1. Course overview. What is a robot? Types of robot. The many areas of robotics.

Lecture 2. A short history of robotics.

Lecture 3. Physical embodiment, sensors.

Lecture 4. Actuators.

Lecture 5. Effectors for locomotion and manipulation.

Lecture 6. Control systems; closed-loop control and PID control.
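The closed-loop and PID control topic of Lecture 6 can be illustrated with a short sketch. The C++ below is a minimal example, assuming one common discrete-time form of the controller, u = Kp e + Ki (sum of e dt) + Kd (change in e) / dt, and an assumed first-order plant dx/dt = u integrated with Euler steps. The `PID` struct and the `simulate` function are hypothetical names chosen for this illustration; they are not part of the course software.

```cpp
#include <cassert>

// Minimal discrete-time PID controller (illustrative sketch; the struct and
// its interface are hypothetical, not part of the course software).
struct PID {
    double kp, ki, kd;       // proportional, integral, and derivative gains
    double integral = 0.0;   // running sum of error * dt
    double prevError = 0.0;  // error from the previous call
    bool first = true;       // true until the first update has run

    PID(double p, double i, double d) : kp(p), ki(i), kd(d) {}

    // Returns the control signal u for one sampling interval dt (seconds).
    double update(double setpoint, double measured, double dt) {
        assert(dt > 0.0);
        const double error = setpoint - measured;
        integral += error * dt;
        // Skip the derivative on the first call: there is no previous error yet.
        const double derivative = first ? 0.0 : (error - prevError) / dt;
        first = false;
        prevError = error;
        return kp * error + ki * integral + kd * derivative;
    }
};

// Closed-loop simulation of an assumed first-order plant dx/dt = u
// (e.g. a velocity-commanded joint), using Euler integration.
double simulate(PID pid, double setpoint, double x0, double dt, int steps) {
    double x = x0;
    for (int k = 0; k < steps; ++k) {
        const double u = pid.update(setpoint, x, dt);  // sense and compute
        x += u * dt;                                   // act on the plant
    }
    return x;
}
```

For example, `simulate(PID(2.0, 0.0, 0.0), 1.0, 0.0, 0.01, 1000)` drives the state from 0 to within a very small distance of the setpoint 1. Skipping the derivative term on the first call avoids a spike caused by the missing previous error; a practical controller would usually also limit the integral term to prevent wind-up.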

Module 2: The Robot Operating System (ROS)

Lecture 1. Installation and overview of the software development environment for examples, exercises, and assignments.

Lecture 2. Introduction to ROS (Robot Operating System); the turtlesim simulator.

Lecture 3. Writing ROS software in C++: publishers and subscribers.

Lecture 4. Writing ROS software in C++: services.

Module 3: Mobile Robots

Lecture 1. Locomotion vs. navigation; challenges of navigation: localization, search, path planning, planning, coverage, SLAM.

Lecture 2. Absolute position estimation.

Lecture 3. Relative position estimation using inertial sensors.

Lecture 4. Relative position estimation using odometry.

Lecture 5. Kinematics of a two-wheel differential drive robot.

Lecture 6. The go-to-position problem; divide-and-conquer controller.

Lecture 7. The go-to-position and go-to-pose problems; MIMO controllers.

Lecture 8. Path planning: the wavefront algorithm to find a shortest path on a map using breadth-first search for unweighted graphs.

Lecture 9. Path planning: Dijkstra's algorithm for weighted graphs.

Lecture 10. Path planning: A* algorithm; other search approaches.
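The wavefront algorithm of Lecture 8 can be sketched in a few dozen lines. The C++ below is a minimal illustration, assuming a 4-connected occupancy grid stored as `std::vector<std::vector<int>>` with 0 for free cells and 1 for obstacles; the names `Grid`, `Cell`, `wavefront`, and `extractPath` are hypothetical. A breadth-first search from the goal labels every reachable free cell with its distance in moves, and a shortest path is recovered by descending those labels from the start.

```cpp
#include <cassert>
#include <queue>
#include <utility>
#include <vector>

// Assumed map representation: 0 = free cell, 1 = obstacle; 4-connected moves.
using Grid = std::vector<std::vector<int>>;
using Cell = std::pair<int, int>;  // (row, column)

const int kDr[4] = {-1, 1, 0, 0};
const int kDc[4] = {0, 0, -1, 1};

// Wavefront: breadth-first search outward from the goal. Each reachable free
// cell is labelled with its distance (number of moves) to the goal; -1 marks
// obstacles and unreachable cells.
Grid wavefront(const Grid& occ, Cell goal) {
    const int rows = static_cast<int>(occ.size());
    const int cols = static_cast<int>(occ[0].size());
    assert(occ[goal.first][goal.second] == 0);
    Grid dist(rows, std::vector<int>(cols, -1));
    std::queue<Cell> frontier;
    dist[goal.first][goal.second] = 0;
    frontier.push(goal);
    while (!frontier.empty()) {
        const Cell cur = frontier.front();
        frontier.pop();
        for (int k = 0; k < 4; ++k) {
            const int r = cur.first + kDr[k], c = cur.second + kDc[k];
            if (r < 0 || r >= rows || c < 0 || c >= cols) continue;
            if (occ[r][c] != 0 || dist[r][c] != -1) continue;
            dist[r][c] = dist[cur.first][cur.second] + 1;  // first visit = shortest
            frontier.push({r, c});
        }
    }
    return dist;
}

// Follow strictly decreasing labels from start to goal. Returns an empty
// vector if the start cell was not reached by the wavefront.
std::vector<Cell> extractPath(const Grid& dist, Cell start) {
    std::vector<Cell> path;
    int r = start.first, c = start.second;
    if (dist[r][c] < 0) return path;
    const int rows = static_cast<int>(dist.size());
    const int cols = static_cast<int>(dist[0].size());
    path.push_back({r, c});
    while (dist[r][c] > 0) {
        for (int k = 0; k < 4; ++k) {
            const int nr = r + kDr[k], nc = c + kDc[k];
            if (nr < 0 || nr >= rows || nc < 0 || nc >= cols) continue;
            if (dist[nr][nc] == dist[r][c] - 1) { r = nr; c = nc; break; }
        }
        path.push_back({r, c});
    }
    return path;
}
```

Because every move has the same cost, breadth-first search yields shortest paths here; with unequal edge costs, Dijkstra's algorithm or A* (Lectures 9 and 10) would be needed instead.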

Module 4: Robot Manipulators

Lecture 1. Robot programming; coordinate frames of reference and homogeneous transformations.

Lecture 2. Object pose specification with homogeneous transformations and with vectors and quaternions.

Lecture 3. Robot programming by frame-based task specification.

Lecture 4. Pick-and-place example of task-level robot programming.

Lecture 5. Implementation of the pick-and-place example for a Lynxmotion AL5D robot arm using the Frame class in C++.

Lecture 6. Forward kinematics; Denavit-Hartenberg representation; forward kinematics of the Lynxmotion AL5D arm.

Lecture 7. Inverse kinematics; analytical vs. numerical solution to inverse kinematics; inverse kinematics of the Lynxmotion AL5D arm.
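The homogeneous transformations used in Lectures 1 to 3 to specify frames and object poses can be illustrated with a small sketch. The C++ below is not the course's `Frame` class; it is a minimal example with hypothetical helper names (`identity4`, `trans`, `rotZ`, `compose`, `rigidInverse`, `transformPoint`). It shows how a 4x4 matrix combines a rotation and a translation, how frames are chained by matrix multiplication, and how a rigid transform is inverted using the transpose of its rotation part.

```cpp
#include <array>
#include <cassert>
#include <cmath>

// 4x4 homogeneous transformation [R p; 0 0 0 1] (illustrative sketch; not
// the course's Frame class).
using Mat4 = std::array<std::array<double, 4>, 4>;

Mat4 identity4() {
    Mat4 m{};  // value-initialised to all zeros
    for (int i = 0; i < 4; ++i) m[i][i] = 1.0;
    return m;
}

// Pure translation by (x, y, z).
Mat4 trans(double x, double y, double z) {
    Mat4 m = identity4();
    m[0][3] = x;
    m[1][3] = y;
    m[2][3] = z;
    return m;
}

// Pure rotation by theta radians about the z axis.
Mat4 rotZ(double theta) {
    Mat4 m = identity4();
    const double c = std::cos(theta), s = std::sin(theta);
    m[0][0] = c;  m[0][1] = -s;
    m[1][0] = s;  m[1][1] = c;
    return m;
}

// Chain frames by matrix product: compose(aTb, bTc) returns aTc.
Mat4 compose(const Mat4& a, const Mat4& b) {
    Mat4 out{};
    for (int i = 0; i < 4; ++i)
        for (int j = 0; j < 4; ++j)
            for (int k = 0; k < 4; ++k) out[i][j] += a[i][k] * b[k][j];
    return out;
}

// Inverse of a rigid transform: [R p]^-1 = [R^T, -R^T p].
Mat4 rigidInverse(const Mat4& t) {
    Mat4 out = identity4();  // translation column starts at zero
    for (int i = 0; i < 3; ++i) {
        for (int j = 0; j < 3; ++j) out[i][j] = t[j][i];  // R transposed
        for (int j = 0; j < 3; ++j) out[i][3] -= t[j][i] * t[j][3];
    }
    return out;
}

// Map a point given in the frame described by t into t's reference frame.
std::array<double, 3> transformPoint(const Mat4& t, double x, double y, double z) {
    std::array<double, 3> p{};
    for (int i = 0; i < 3; ++i) p[i] = t[i][0] * x + t[i][1] * y + t[i][2] * z + t[i][3];
    return p;
}
```

For example, composing a translation of one unit along x with a 90-degree rotation about z maps the point (1, 0, 0) in the rotated frame to (1, 1, 0) in the base frame. Reversing the order of composition gives (0, 2, 0) instead, since matrix multiplication does not commute.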

Module 5: Robot Vision

Lecture 1. Computer vision; optics and sensors; image acquisition; image representation.

Lecture 2. Image processing.

Lecture 3. Introduction to OpenCV.

Lecture 4. Segmentation; region-based approaches; feature-based thresholding; graph cuts.

Lecture 5. Segmentation; boundary-based approaches; edge detection.

Lecture 6. Image analysis; feature extraction.

Lecture 7. K-nearest neighbour, minimum distance, linear, maximum likelihood and Bayes classifiers.

Lecture 8. Perspective transformation; camera model; camera calibration.

Lecture 9. Inverse perspective transformation.

Lecture 10. Stereo vision; epipolar geometry.
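The camera model of Lecture 8 and the stereo vision of Lecture 10 can be connected in a short sketch. The C++ below assumes an ideal pinhole camera with no lens distortion and square pixels, and a rectified stereo pair whose right camera is displaced by the baseline b along the left camera's +X axis. It projects a 3D point with u = f X / Z + cx and v = f Y / Z + cy, and recovers depth from disparity with Z = f b / d. The `Pinhole` and `Pixel` structs and the function names are hypothetical; a calibrated real camera would also need distortion coefficients and possibly different focal lengths along the two image axes.

```cpp
#include <cassert>

// Ideal pinhole camera (assumed model: no lens distortion, square pixels).
// f is the focal length in pixels; (cx, cy) is the principal point.
struct Pinhole {
    double f, cx, cy;
};

struct Pixel {
    double u, v;
};

// Perspective projection of a point (X, Y, Z) given in the camera frame,
// with Z > 0 along the optical axis: u = f X / Z + cx, v = f Y / Z + cy.
Pixel project(const Pinhole& cam, double X, double Y, double Z) {
    assert(Z > 0.0);
    return {cam.f * X / Z + cam.cx, cam.f * Y / Z + cam.cy};
}

// Depth from a rectified stereo pair in which the right camera is displaced by
// the baseline b along the left camera's +X axis. The disparity d = uLeft - uRight
// (pixels) is then f b / Z, so Z = f b / d, in the same units as b.
double depthFromDisparity(const Pinhole& cam, double baseline, double uLeft, double uRight) {
    const double d = uLeft - uRight;
    assert(d > 0.0);
    return cam.f * baseline / d;
}
```

Projecting the same point into both cameras of the pair (subtracting the baseline from X for the right camera) and passing the two u coordinates to `depthFromDisparity` returns the original Z, which is a convenient consistency check.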

August 2021.